If you’ve kept a close eye on new content in the SEO community over the past year or so, one of the things I think you’ll notice is that search intent is on the minds of some really smart folks in our industry:

- AJ Kohn, https://www.blindfiveyearold.com/algorithm-analysis-in-the-age-of-embeddings

- Stephanie Briggs, https://www.slideshare.net/slideshow/searchdriven-content-strategy-mozcon-2018-105014924/105014924

- Dana DiTomaso, https://moz.com/blog/using-stat-for-content-strategy

- Kevin Indig, User Intent Mapping on Steroids (via Archive.org)

- Tom Rayner, https://raynernomics.com/predict-keyword-latent-intent/ (via Archive.org)

(There are definitely others, but these are some of my favorites.)

Why is search intent suddenly in the SEO zeitgeist?

I believe it’s because Google’s search results have shifted in the past few years. Google has gotten significantly better at producing search results that deliver what the user is looking for without relying solely on classical SEO factors.

In the process, I think we’ve seen search intent become a more dominant factor on multiple types of search results, often outweighing classic SEO ranking factors like links, title tags, and other SEO basics. Historical domain-wide link factors (domain authority, basically) no longer carry the importance that we saw in 2010-2015.

I’m burying the lede a little bit here, but I’m really excited to share that in the spring of 2019, Content Harmony will be releasing a new SaaS toolset that helps SEOs & content marketers produce better content.

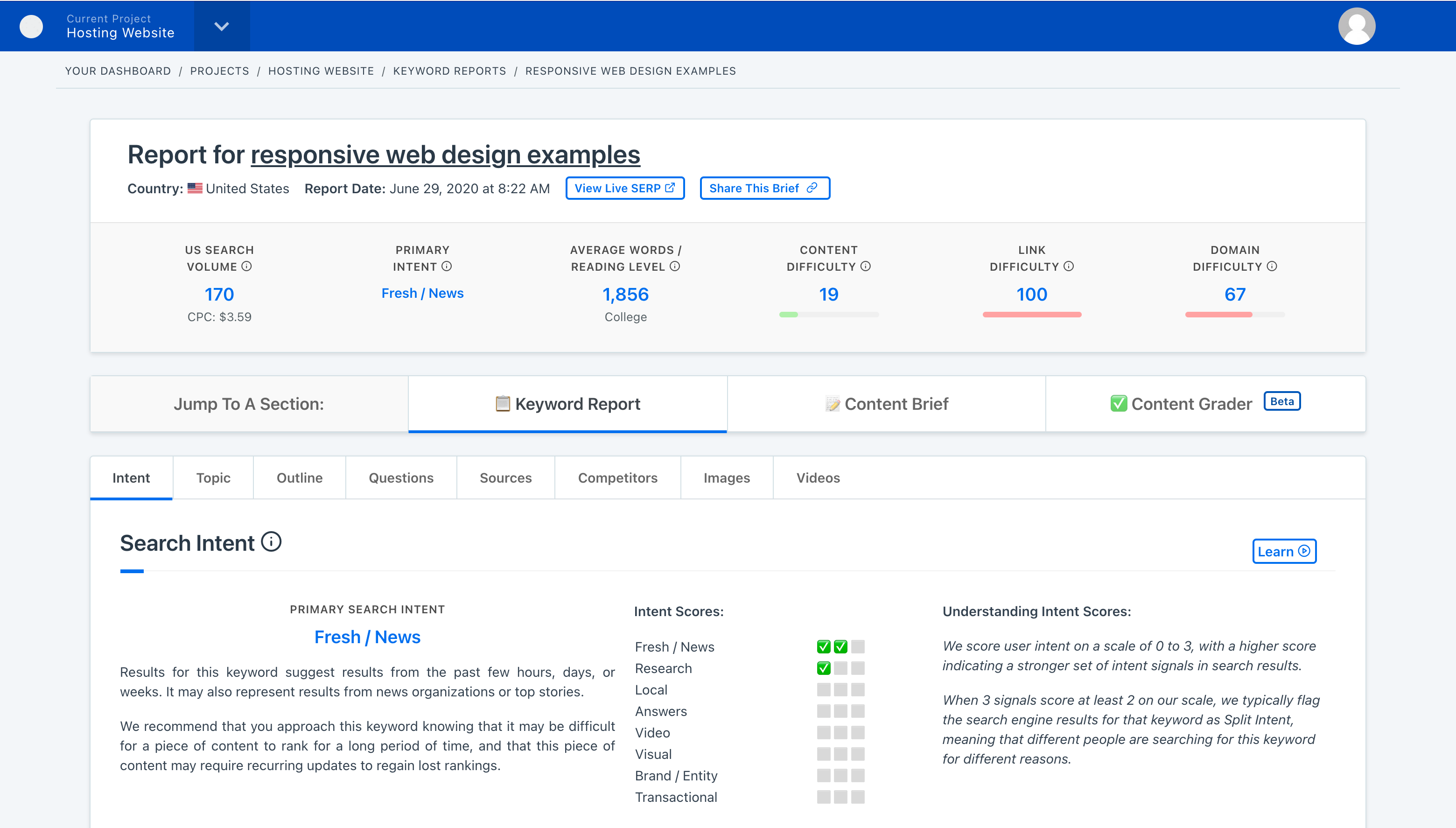

One of the great features in that toolset is a new scoring system we’ve developed for classifying search intent.

In this post, I’m going to lay out why we felt this was a key part of the puzzle in producing better content. I’m not satisfied with current methods of classifying search intent, so I’ll walk you through the new classification and intent scoring process that we have built and show you some examples.

How We Talk About Search Intent Hasn’t Changed Much Since 2002

There are two common ways of labeling search intent that I’ve seen over the years.

The first classification that most of us have encountered is Navigational, Informational, or Transactional.

This methodology dates back to a 2002 peer-reviewed paper from Andrei Broder at AltaVista (thanks to Tom Anthony for his post with the AltaVista paper link and some other thoughts on this system).

Broder’s paper defines each category as follows:

- Navigational: The immediate intent is to reach a particular site.

- Informational: The intent is to acquire some information assumed to be present on one or more web pages.

- Transactional: The intent is to perform some web-mediated activity.

The paper goes on to explain an interesting methodology they used to survey users to understand the intent behind their search.

Fast forward to the mid-2010s, when Google began talking about its own variation on this: Know, Go, Do, & Buy “micro-moments”. These are a bit more user-friendly but cover essentially the same ground as Navigational, Informational, and Transactional.

I think these traditional classification systems are useful to help beginners understand how different searches are intended to yield different results, but they’re not very useful to SEOs and content creators on a day-to-day basis.

So where do these systems fall apart?

1) Navigational / Informational / Transactional Is Too Broad

As SEOs and content creators, we can produce much better content if we understand what a typical user is looking for. Unfortunately, I think the existing systems are good for explaining search intent in hypothetical terms, but their usefulness drops off once you start applying them to keyword research and showing content creators and writers how to factor intent into their content.

If you’re trying to understand the difference between a format-driven navigational search to reach YouTube, like [snowboard videos], and a branded navigational search, like [seattle metro routes], seeing them both labeled as Navigational isn’t super helpful. Grouping keywords this way is also too generic to be very useful during the keyword research and mapping process.

2) Navigational / Informational / Transactional Don’t Account For Overlapping Intent

Additionally, I can think of many searches that fall into multiple categories. If somebody searches [amazon laptop deals], would you label that Navigational (trying to reach Amazon) or Transactional (trying to buy a laptop)? Forcing each query into a single category, rather than treating it as a set of overlapping intents, is problematic as well.

3) Search Intent Labels Are Often Guessed Manually Based Upon The Query Itself, Not The Actual Search Results

Most of the methods people use during the keyword research process involve keying off modifiers that we expect to signal a specific intent. An SEO strategist reviews a list of hundreds or thousands of keywords and fills in a column with Transactional, Informational, or Navigational.

For example, if a query includes words like “sale”, “cheap”, “deals”, or “for sale”, we mark it as transactional and move on. If it’s not clear what the intent is from glancing at the keyword, we mark it as Split Intent or with our best guess and keep moving down the spreadsheet. Who has the time to actually look at all of these SERPs and see what types of results are showing up, right?

(Interesting sidenote on manually assigning intent: that 2002 paper explicitly mentions that “…we need to clarify that there is no assumption here that this intent can be inferred with any certitude from the query.”)

Another approach is to look for a city name or modifier like “near me” to label a query as local – even though many of our non-modified search terms might be showing local packs at the top of the results.
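To make that manual process concrete, here’s a minimal Python sketch of modifier-based labeling. The modifier lists, the city list, and the `label_by_query_text` function are hypothetical stand-ins for the rules a strategist applies by eye; nothing in it looks at the actual search results, which is exactly the weakness described here.

```python
import re

# Hypothetical modifier rules of the kind applied by eye in a keyword spreadsheet.
TRANSACTIONAL_MODIFIERS = ["sale", "cheap", "deals", "for sale"]
LOCAL_MODIFIERS = ["near me"]
KNOWN_CITIES = ["seattle", "austin", "dallas"]  # illustrative, not exhaustive


def has_term(query: str, term: str) -> bool:
    """True if `term` appears as whole word(s), so 'sale' won't match 'wholesale'."""
    return re.search(r"\b" + re.escape(term) + r"\b", query) is not None


def label_by_query_text(query: str) -> str:
    """Guess an intent label from the keyword alone, never looking at the SERP."""
    q = query.lower()
    if any(has_term(q, m) for m in TRANSACTIONAL_MODIFIERS):
        return "Transactional"
    if any(has_term(q, m) for m in LOCAL_MODIFIERS + KNOWN_CITIES):
        return "Local"
    return "Split Intent"  # the "best guess" bucket for anything unclear


for keyword in ["cheap laptop deals", "pizza near me", "texas electric", "texas electricity"]:
    print(f"{keyword!r}: {label_by_query_text(keyword)}")
```

Note that [texas electric] and [texas electricity] get the same label no matter what Google actually shows for each, and an unmodified query like [pizza] is never flagged as local even if its SERP leads with a local pack.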

No shame is intended if this sounds familiar – I’ve done it, too – but it’s a process that leads to mistakes and incorrect assumptions.

The experienced SEOs in the room should be able to think of many times when a search yielded a number of unexpected result types as Google seemed to shift the implicit intent it was trying to serve.

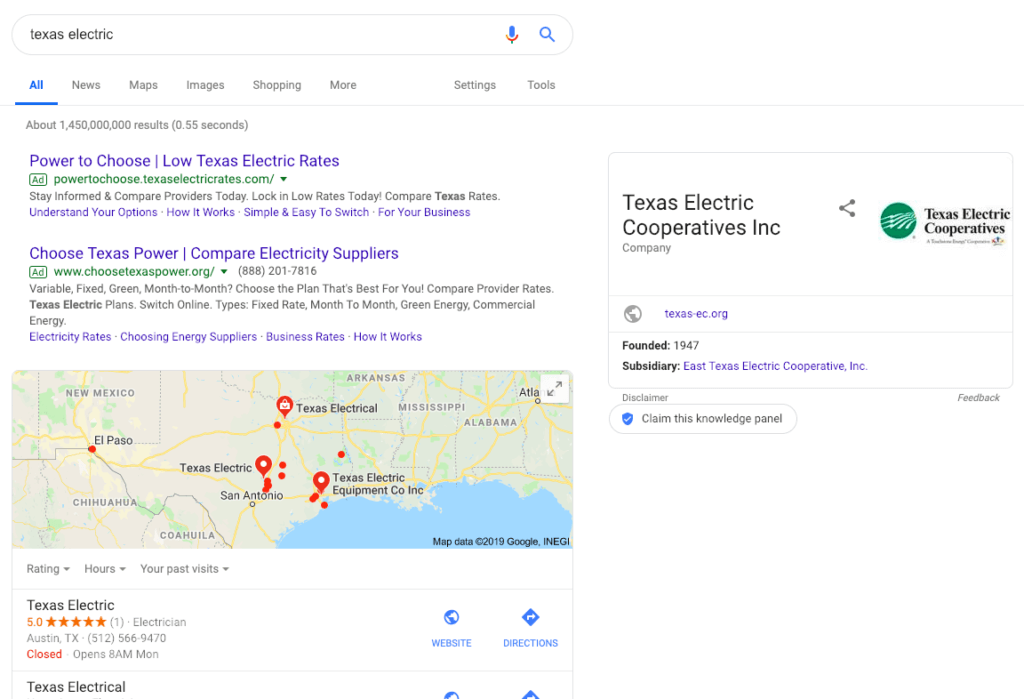

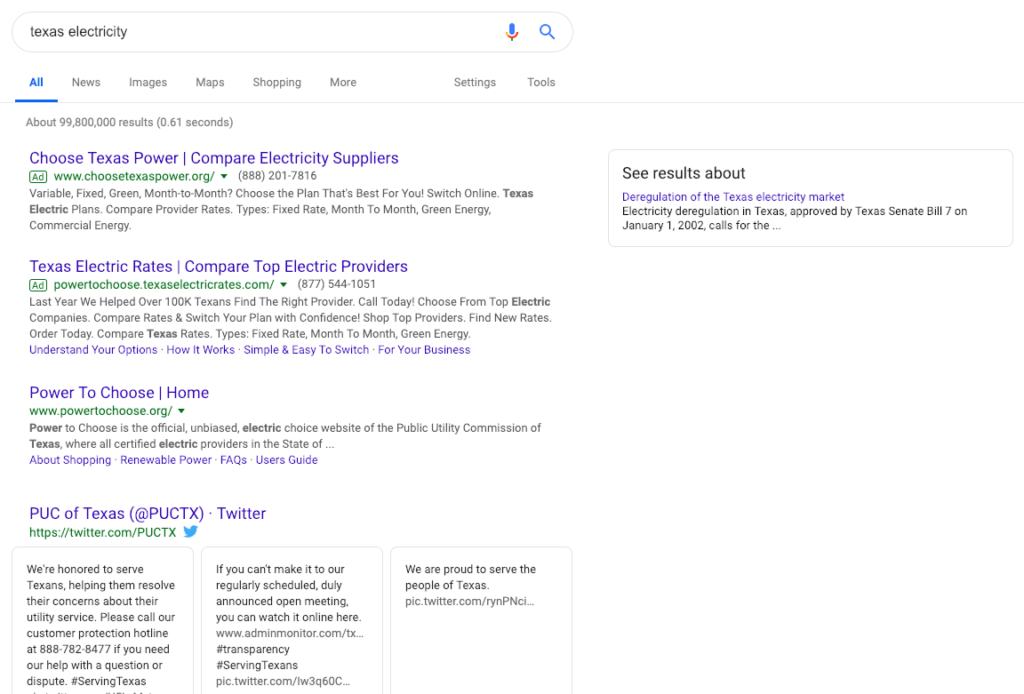

It’s also not hard to find shifts in intent caused by query changes that don’t look significant when you’re looking at them in an Excel sheet. Take a look at [texas electric] vs [texas electricity].

The problem underlying these examples is that we’re trying to do this classification manually, rather than introducing tools into the process that can do it more reliably.

4) SEOs Don’t Need To Understand Intent The Same Way Search Engines Understand It

We (the SEO community) are not search engines and we are not trying to decide what results a user wants to see, so it’s OK for us to look at intent differently than a classical information retrieval model.

The goal we (Content Harmony) are trying to reach is not to understand the true intent(s) of all users. The goal of our tool is to understand the type of content that Google is looking to serve based upon what Google knows about the user’s intent, so that we can help SEOs & content creators make the best content they can for that keyword. I’ve been careful throughout this post to say “search intent”, not “user intent”, for basically that reason.

As SEOs, we mainly need to understand whether our content (and the format it’s in) fits the format that Google is looking to deliver.

How Content Harmony is Classifying Search Intent

OK, so, money where my mouth is – how exactly do I propose we measure search intent more usefully, and more reliably?

I believe that it’s more useful to classify search intent in a way that more closely aligns with SERP features. Here are the Intent Types we’ve begun using in our software (presented in no particular order):

1) Research Intent

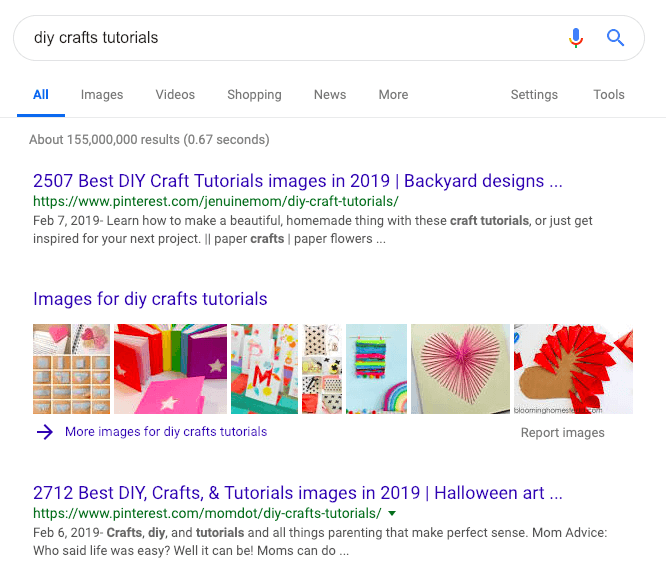

One of the most common intent types, Research Intent generally covers search phrases that generate results like Wikipedia pages, definition boxes, scholarly results, lots of blog posts or articles, in-depth articles, and other SERP features that suggest users are looking for answers or insights into a topic.

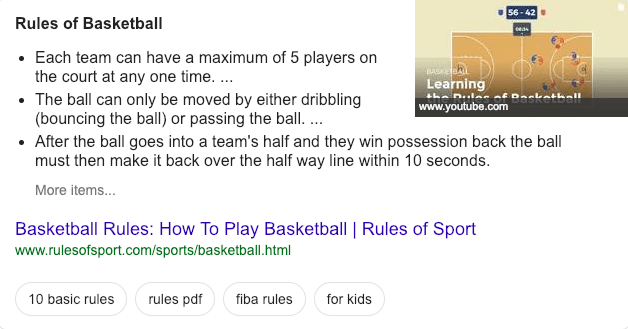

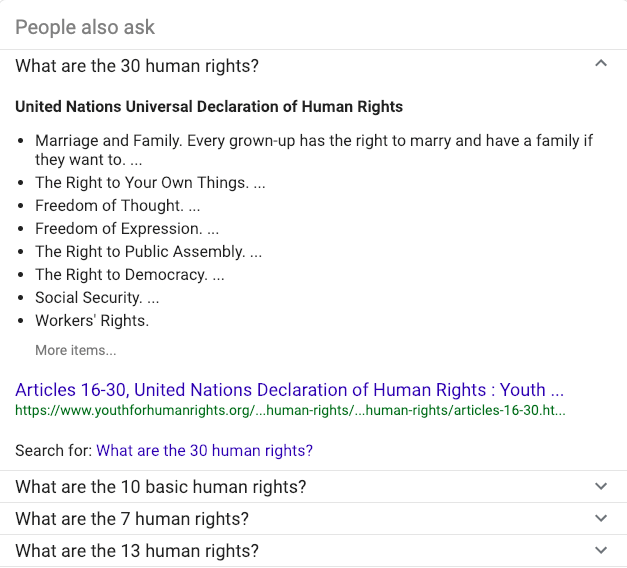

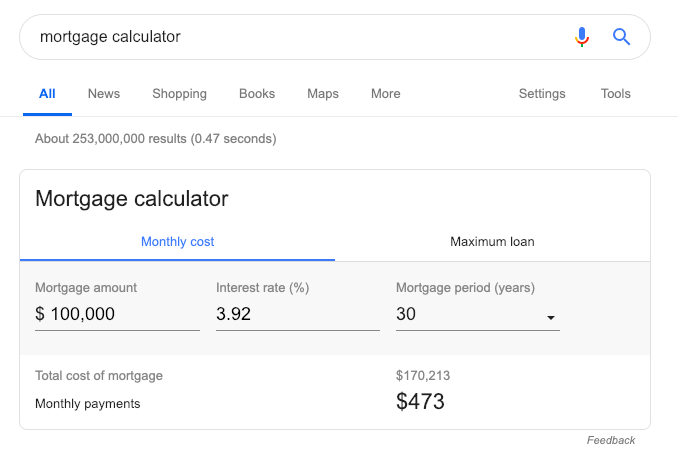

2) Answer Intent

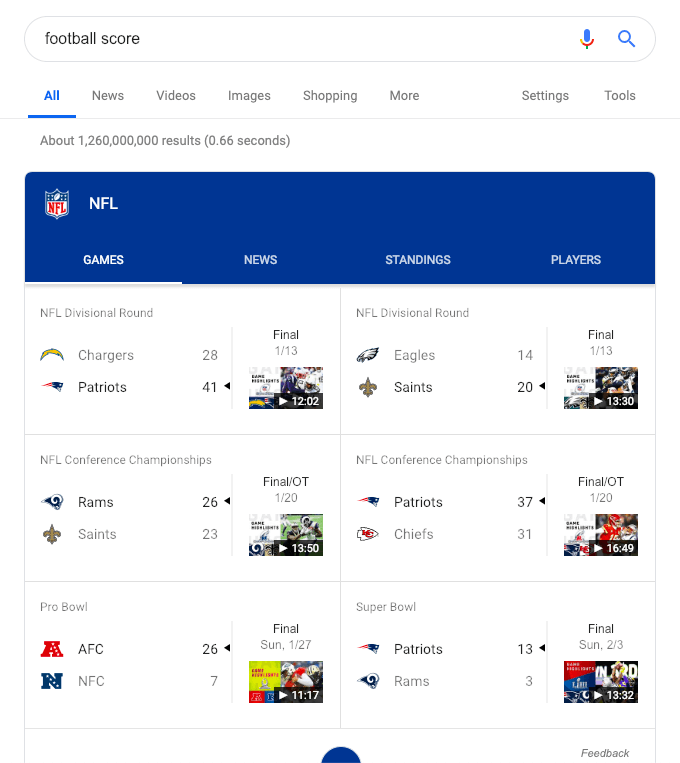

Slightly different from research, there are quite a few searches where users don’t care much about clicking into a result and researching it; they just want a quick answer. Typical signs are definition boxes, answer boxes, calculators, sports scores, and other answer boxes that aren’t featured snippets, along with a very low click-through rate (CTR) on the search results.

3) Transactional Intent

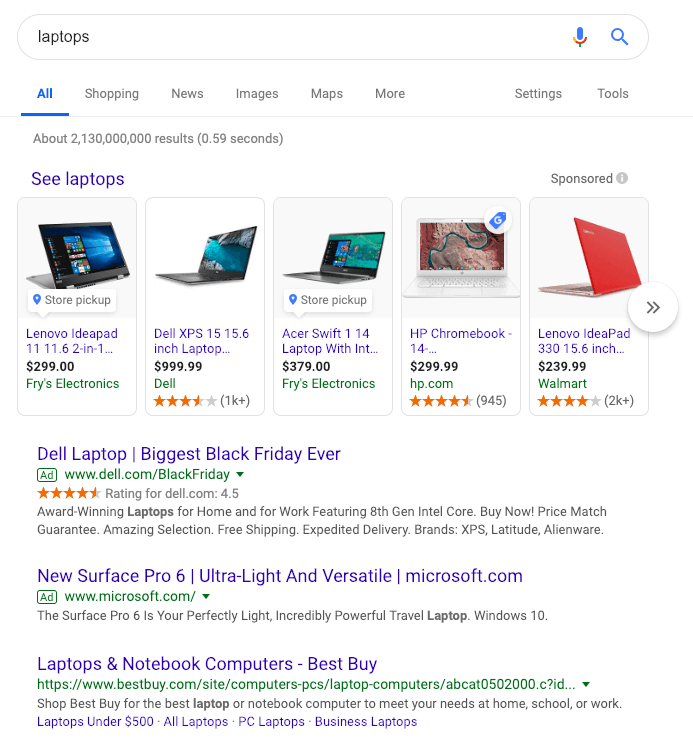

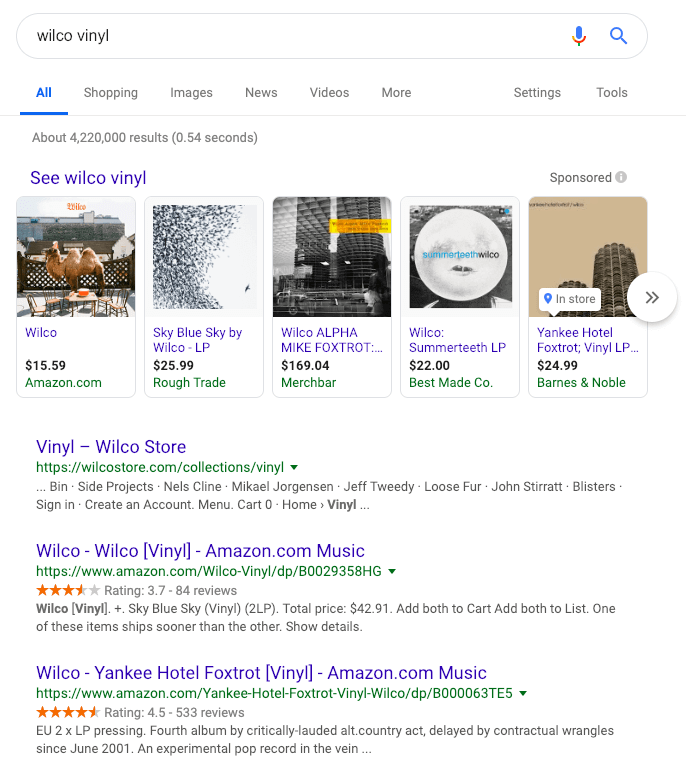

Users looking to buy or research products are easy to detect, since Google tends to be aggressive with Shopping boxes and other purchase-intent features. Other straightforward detection methods include multiple results from known ecommerce players like Amazon or Walmart, results with /product/-style URL structures, and multiple results that carry ecommerce category or product page schema markup.
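Here’s a rough sketch of how those transactional signals might be counted from parsed SERP data. The input shapes, the `KNOWN_ECOMMERCE` set, and the signal names are assumptions for illustration, not Content Harmony’s actual implementation or any SERP API’s schema.

```python
from urllib.parse import urlparse

# Hypothetical list of well-known ecommerce domains.
KNOWN_ECOMMERCE = {"amazon.com", "walmart.com"}


def host(url: str) -> str:
    """Return the hostname without a leading 'www.'."""
    return urlparse(url).netloc.lower().removeprefix("www.")


def transactional_signals(features: list[str], results: list[dict]) -> dict[str, int]:
    """Count a few transactional-intent signals on one SERP.

    `features` is a list of SERP feature names (e.g. "shopping"); each result
    dict has a "url" and an optional "schema_types" list. Both shapes are
    assumptions made for this sketch.
    """
    return {
        "shopping_box": int("shopping" in features),
        "ecommerce_domains": sum(host(r["url"]) in KNOWN_ECOMMERCE for r in results),
        "product_urls": sum("/product/" in urlparse(r["url"]).path for r in results),
        "product_schema": sum("Product" in r.get("schema_types", []) for r in results),
    }


serp_results = [
    {"url": "https://www.amazon.com/s?k=laptop"},
    {"url": "https://shop.example.com/product/123", "schema_types": ["Product"]},
    {"url": "https://en.wikipedia.org/wiki/Laptop"},
]
print(transactional_signals(["shopping", "people_also_ask"], serp_results))
```

Returning counts rather than a yes/no answer leaves room to weight each signal later.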

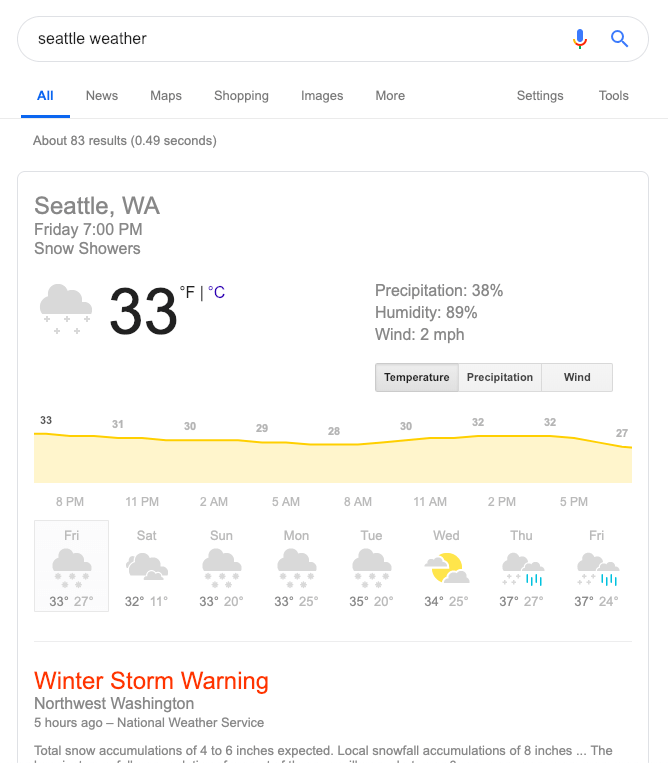

4) Local Intent

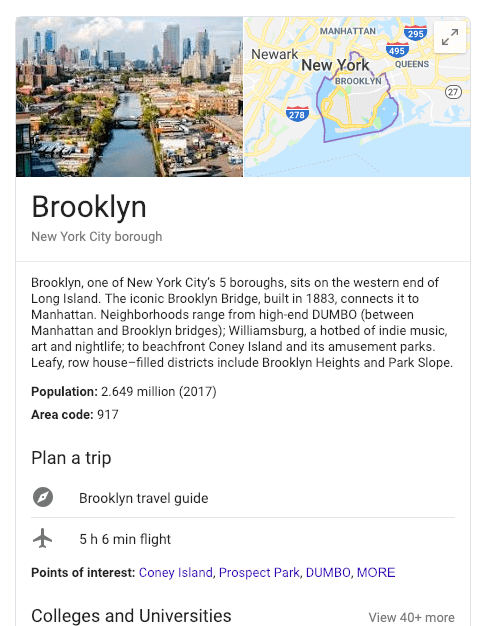

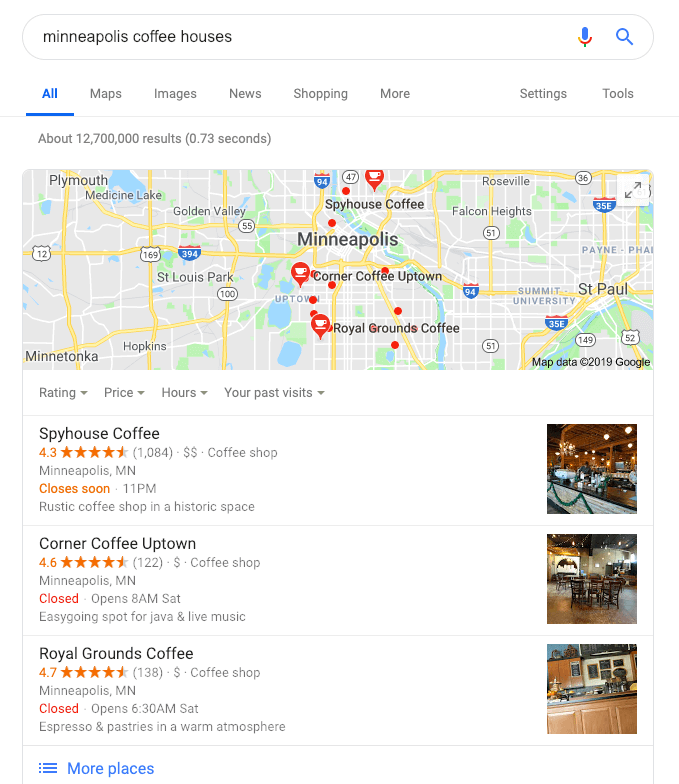

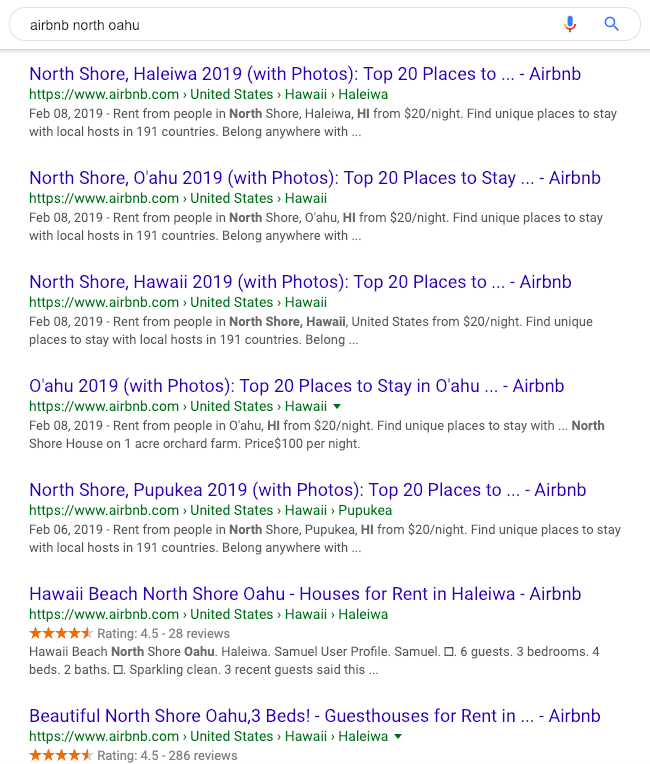

Local intent SERPs are dominated by a combination of local packs, the geographic markers that have recently started showing up in organic results, a Maps tab in the top navigation, and, if there’s a way of detecting it, organic results localized to the IP being used to search.
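Here’s a hypothetical sketch that names which local signals appear on a SERP. The parameters and cutoffs (a local pack in the top 3, Maps among the first three tabs, at least two geo-marked results) are illustrative assumptions. It skips IP-localized organic results, which would need extra data this sketch doesn’t model.

```python
from typing import Optional


def local_signals(local_pack_position: Optional[int], nav_tabs: list[str],
                  geo_marked_results: int) -> list[str]:
    """Name the local-intent signals present on one SERP.

    local_pack_position: 1-based slot of the local pack, or None if absent.
    nav_tabs: search tabs in display order, e.g. ["All", "Maps", "Images"].
    geo_marked_results: organic results showing a geographic marker.
    The cutoffs below (top 3, first three tabs, 2+ markers) are hypothetical.
    """
    signals = []
    if local_pack_position is not None:
        # A pack near the top is a stronger sign than one further down.
        signals.append("local_pack_top_3" if local_pack_position <= 3 else "local_pack")
    if "Maps" in nav_tabs[:3]:
        signals.append("maps_tab")
    if geo_marked_results >= 2:
        signals.append("geo_markers")
    return signals


print(local_signals(1, ["All", "Maps", "Images"], 3))
```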

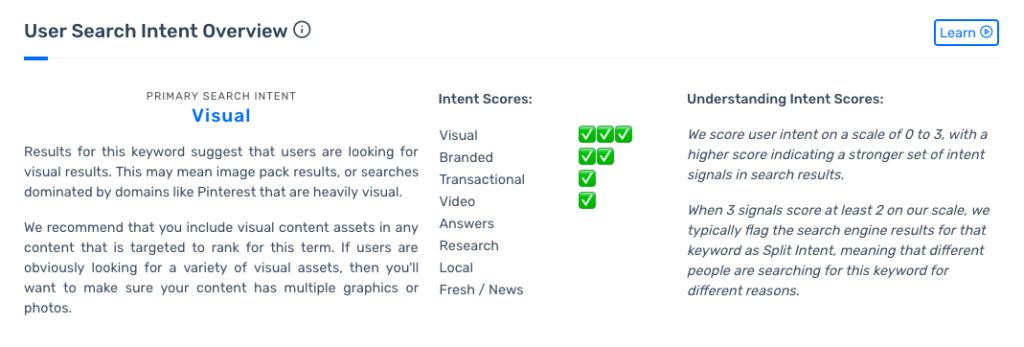

5) Visual Intent

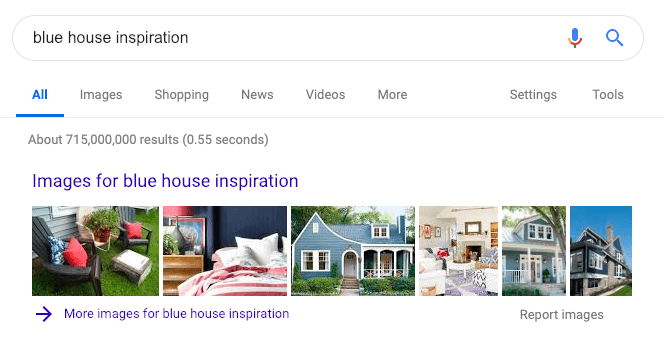

Visual intent is relatively easy to track using image packs and thumbnails, but prominence is a factor. Many SERPs include an image pack somewhere in the top 100 results by default, so placement in the top 10 is a more significant sign, as is an image pack with 2 rows of results, or top 10 rankings from sites like Pinterest.
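Because prominence matters more than presence, a sketch of visual signals might distinguish a top 10 image pack from one buried further down. Everything here (parameter names, the top 10 cutoff, the signal names) is a hypothetical illustration.

```python
from typing import Optional


def visual_signals(image_pack_position: Optional[int], image_pack_rows: int,
                   pinterest_top_10: int) -> list[str]:
    """Name visual-intent signals, weighting prominence rather than mere presence.

    image_pack_position: 1-based slot of the image pack, or None if absent.
    image_pack_rows: rows of thumbnails shown in the pack.
    pinterest_top_10: how many top 10 organic results come from Pinterest.
    """
    signals = []
    if image_pack_position is not None and image_pack_position <= 100:
        # A pack somewhere in the top 100 is common; a top 10 pack is a stronger sign.
        signals.append("image_pack_top_10" if image_pack_position <= 10 else "image_pack")
        if image_pack_rows >= 2:
            signals.append("two_rows")
    if pinterest_top_10 > 0:
        signals.append("pinterest_top_10")
    return signals


print(visual_signals(2, 2, 1))
```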

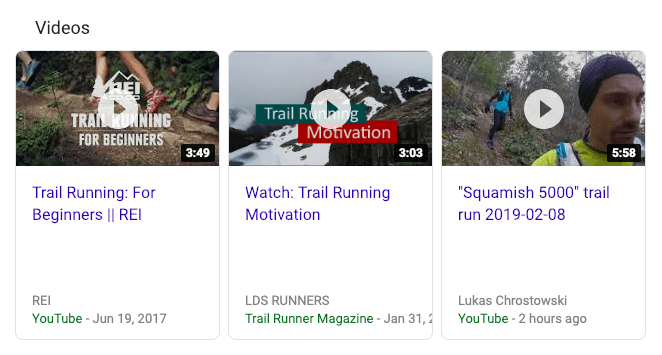

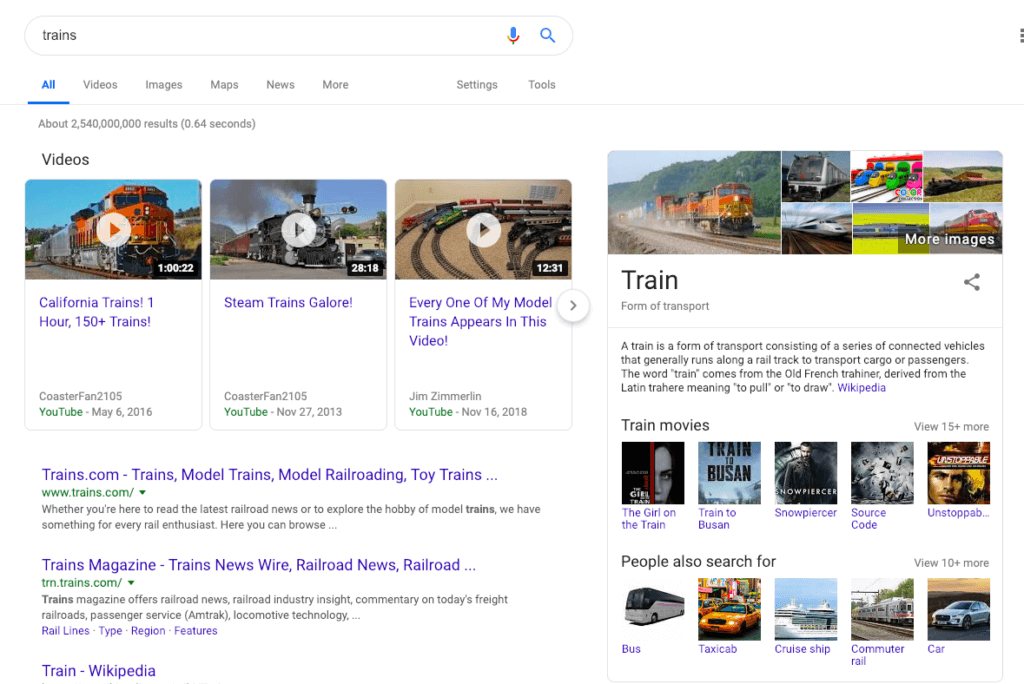

6) Video Intent

Originally I was going to classify Video Intent alongside images as a Visual Intent category, but as I started reviewing more and more search results, it became clear that Video was really its own type of intent. Between video carousels, video thumbnails, and even video featured snippets now becoming commonplace, video is becoming critical to ranking for certain types of queries.

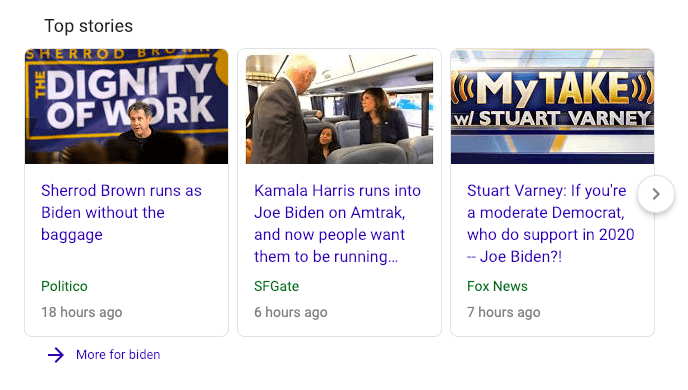

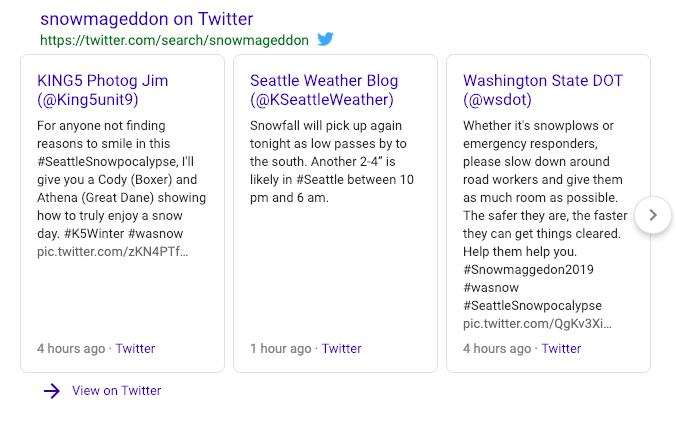

7) Fresh/News Intent

When we see a News tab in the navigation, Top Stories boxes, Recent Tweets, and heavy use of dates from the past day/week/month in the organic results, that tells us a high volume of content is being produced on this topic, and we can probably infer that Google sees higher user interaction with more recent results for it.
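One way to quantify the date-based part of that, sketched under assumptions: given the date shown on each organic result (or `None` if undated), compute the share of results published within the past day, week, and month. The function and field names are hypothetical, and Recent Tweets are left out for brevity.

```python
from datetime import date
from typing import Optional


def fresh_signals(result_dates: list[Optional[date]], today: date,
                  has_top_stories: bool, has_news_tab: bool) -> dict:
    """Summarize freshness signals for one SERP.

    result_dates holds the date shown on each organic result (None if undated);
    undated results still count toward the denominator.
    """
    def share_within(days: int) -> float:
        recent = [d for d in result_dates
                  if d is not None and 0 <= (today - d).days <= days]
        return len(recent) / len(result_dates) if result_dates else 0.0

    return {
        "top_stories": has_top_stories,
        "news_tab": has_news_tab,
        "share_past_day": share_within(1),
        "share_past_week": share_within(7),
        "share_past_month": share_within(30),
    }


dates = [date(2018, 7, 31), date(2018, 7, 28), date(2018, 7, 10), None]
print(fresh_signals(dates, date(2018, 8, 1), True, False))
```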

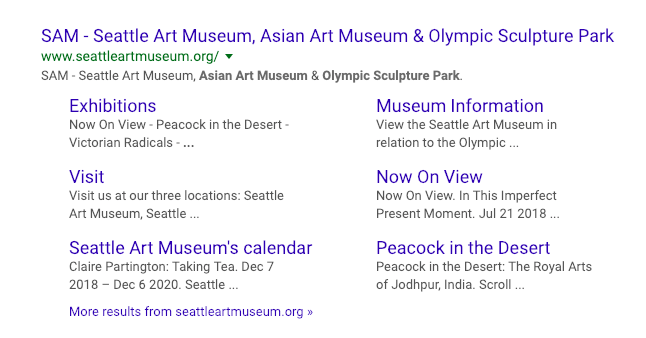

8) Branded Intent

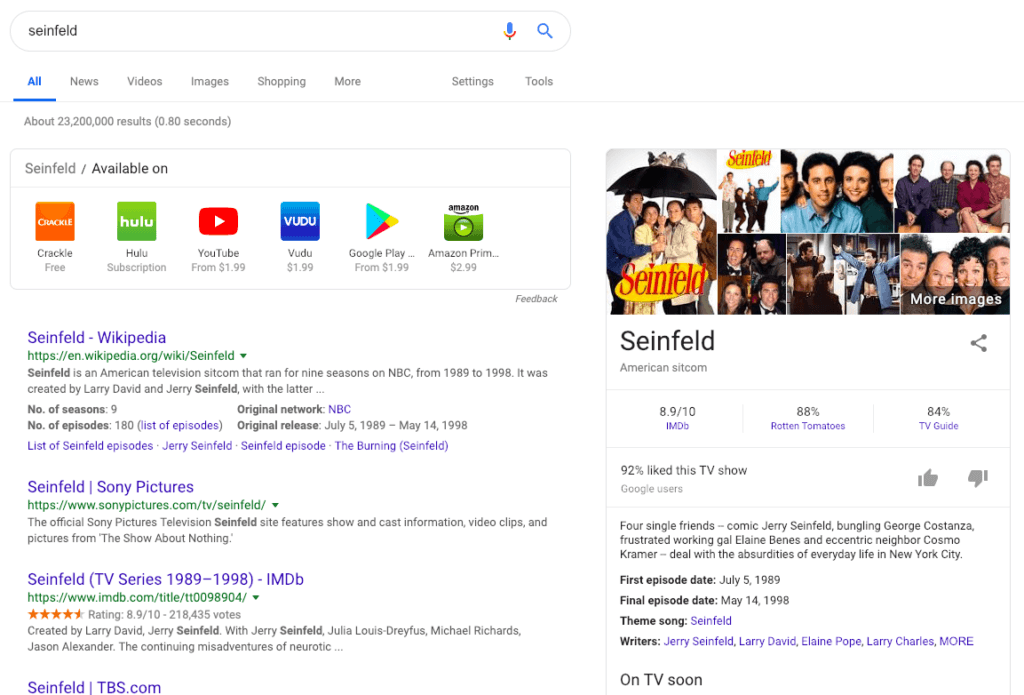

Branded intent queries tend to be dominated by brand homepage results with sitelinks, or by clusters of results from a single domain when a keyword includes a known brand entity (e.g., “walmart TVs”).
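Here’s a minimal sketch of those two branded signals. It assumes you already have a list of known brand terms (the `known_brands` set stands in for real entity data) and uses a hypothetical cutoff of three results from one domain to count as “clustering”.

```python
from collections import Counter
from urllib.parse import urlparse


def branded_signals(query: str, urls: list[str], first_has_sitelinks: bool,
                    known_brands: set[str], min_cluster: int = 3) -> list[str]:
    """Name branded-intent signals on one SERP.

    known_brands stands in for a real brand/entity list; min_cluster is a
    hypothetical threshold for "domain clustering".
    """
    signals = []
    if first_has_sitelinks:
        signals.append("homepage_sitelinks")
    # Find the first query word that matches a known brand, if any.
    brand = next((w for w in query.lower().split() if w in known_brands), None)
    hosts = [urlparse(u).netloc.lower().removeprefix("www.") for u in urls]
    if brand and hosts:
        domain, count = Counter(hosts).most_common(1)[0]
        if count >= min_cluster and brand in domain:
            signals.append("domain_clustering")
    return signals


urls = ["https://www.walmart.com/cp/tvs", "https://www.walmart.com/browse/tvs",
        "https://www.walmart.com/ip/123", "https://www.bestbuy.com/tvs"]
print(branded_signals("walmart TVs", urls, False, {"walmart"}))
```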

9) Split Intent

This last one is more of a “meta intent” type. When we see signs that a user could have a number of potential intents, we should trigger a “Split Intent” warning, since these queries are often difficult to rank for if you’re not the first result for a given intent. For example, if you’re not the first Research Intent result (a spot often held by Wikipedia), your blog post may not have a shot at ranking. This is covered in more detail in the sections below.

While our system is currently focused on the 9 intent classifications, we’ve built our scoring process in a way that allows us to add new intent types in the future. When Google rolls out virtual reality search results, we’ll be ready for it.

How Content Harmony Is Scoring Intent

One of the challenges facing us in search results these days is that it’s very common to find a SERP with multiple potential intent options. So we need a way to indicate overlapping intent.

We also need a way to differentiate between a SERP that contains an image pack at the bottom and a SERP that contains an image pack in the #1 ranking slot; the Visual Intent of those two searches can’t be scored the same. Intent is not a boolean “Yes” or “No” status; intent can be stronger or weaker on different keywords.

So, we developed our own scoring method.

Publicly, Content Harmony will report search intent to our users on a scale of 0-3, depending on the prevalence of certain search features.

- 0 – A score of zero means no signs of this intent were found in the search results.

- 1 – A score of one means we saw some signs of this intent type, but they didn’t appear to be very strong.

- 2 – A score of two means we see enough signs to believe this is one of the top 2-3 types of intent for this query.

- 3 – A score of three doesn’t happen on every result we analyze, but when it does you can be sure that this is a clear intent for that keyword.

We report the Primary Search Intent back to the user depending on which type of intent scored the highest on our scale.

Internally, we track somewhere between 4 and 20 different possible signals for each type of search intent. For example, we might have 11 different signals that suggest local intent on a search result. Each of those signals carries its own raw score relative to the others, and by tracking which signals show up for a keyword, we calculate a raw score for each intent. This raw score stays entirely internal, since it will evolve over time as we add new intent signals.
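To illustrate the general idea of per-signal raw scores rolling up into the public 0-3 scale, here is a hypothetical sketch. Summing weights is an assumption about how raw scores could combine, and the weights, cutoffs, and signal names are made up for the example; the real values are internal and change as signals are added.

```python
# Hypothetical weights: each observed signal contributes a raw score to one intent.
SIGNAL_WEIGHTS = {
    "Local": {"local_pack_top_3": 5, "local_pack": 2, "maps_tab": 2, "geo_markers": 3},
    "Visual": {"image_pack_top_10": 4, "image_pack": 1, "two_rows": 2, "pinterest_top_10": 2},
}

# Hypothetical cutoffs from raw score to the public 0-3 scale, checked in order.
PUBLIC_CUTOFFS = [(8, 3), (4, 2), (1, 1)]


def public_scores(observed: set[str]) -> dict[str, int]:
    """Sum the weights of observed signals per intent, then bucket into 0-3."""
    scores = {}
    for intent, weights in SIGNAL_WEIGHTS.items():
        raw = sum(weight for signal, weight in weights.items() if signal in observed)
        scores[intent] = next((public for minimum, public in PUBLIC_CUTOFFS
                               if raw >= minimum), 0)
    return scores


def primary_intent(scores: dict[str, int]) -> str:
    """Highest public score wins; ties go to the first intent listed."""
    return max(scores, key=lambda intent: scores[intent])


scores = public_scores({"local_pack_top_3", "maps_tab", "geo_markers"})
print(scores, primary_intent(scores))
```

Bucketing the raw score keeps the public scale stable even when new signals and weights are added later.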

Our software will support API queries out of the box. An example JSON result for search intent score might look like this:

```json
{
  "primary_intent": "Transactional",
  "intent_scores": {
    "Transactional": 3,
    "Branded": 2,
    "Visual": 1,
    "Research": 1,
    "Answer": 0,
    "Fresh / News": 0,
    "Local": 0,
    "Video": 0
  }
}
```

(Formatting is subject to change, of course. I’m sure our developer will tell me I missed a semicolon or something.)
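If you were consuming a response shaped like the example above, pulling out the strongest intents is straightforward. This sketch parses the example as a string; since no endpoint is specified, fetching it over HTTP is left out, and `strong_intents` is a hypothetical helper name.

```python
import json

# The example response from above, as a string.
EXAMPLE_RESPONSE = """
{ "primary_intent": "Transactional",
  "intent_scores": { "Transactional": 3, "Branded": 2, "Visual": 1, "Research": 1,
                     "Answer": 0, "Fresh / News": 0, "Local": 0, "Video": 0 } }
"""


def strong_intents(payload: str, minimum: int = 2) -> list[str]:
    """Intents scoring at least `minimum`, strongest first (ties keep input order)."""
    scores = json.loads(payload)["intent_scores"]
    ranked = sorted(scores, key=lambda intent: scores[intent], reverse=True)
    return [intent for intent in ranked if scores[intent] >= minimum]


print(strong_intents(EXAMPLE_RESPONSE))
```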

FAQ & Other Search Intent Considerations

“Isn’t Some of This Actually Formats, Not Intent?”

Good question! Yes, we are mingling format with intent to a degree. I believe that format and intent are heavily intertwined: formats are simply different types of results, and intent is nothing more than the user wanting to see a certain type of result, so the two go hand in hand. Describing intent in terms of format is also a much clearer way to tell SEOs and content writers what type of content they’ll need to produce to meet the intent we’re seeing for that keyword.

A user may not know they’re looking for a video when they search for [how to tie a tie], but good luck trying to rank for that search query without a video if that’s the best format to serve the user’s intent, which is seeing how the hell to tie a half windsor.

A user may not type the words “image gallery” when they search for [house paint inspiration], but good luck trying to rank for that query without some beautiful custom or user-submitted photography. Detecting visual intent on that search result is critical to understanding what that user wants.

This question of detecting formats vs intent is closely related to my notes earlier regarding SEOs needing to understand intent differently than search engines do. We’re not trying to present the user with a set of results that makes them happy; we’re trying to figure out the best result to show Google in order to reach those users.

“Wait, If Intent Is Always Changing Then What If Your Intent Score Is Wrong A Week From Now?”

Another good question! You’re absolutely right: there are excellent examples out there of keywords where the intent can change daily, or even hourly throughout the day (I’m looking at you, Black Friday SERPs).

This tweet from JR Oakes illustrates this well (follow him, it’s worth it).

Here’s the thing – when intent changes in a way that negatively affects your content’s performance, you should be able to spot that in other tools.

Your week-over-week traffic drop alert in Google Analytics will go off. Your rank tracker will show that you lost the top spot for a keyword you’ve ranked #1 for over the past 2 years. Your conversions from that page will be down.

Those are normal daily occurrences in the life of an SEO. And sometimes, your content needs to change, too!

When that happens, sometimes you’ll be able to look at the search result and see what is happening (e.g., a competitor with similar content took over your spot). But sometimes you won’t be quite sure what has changed, and if you previously ran an intent report for that keyword, running a new one is the right way to compare before and after.
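Comparing two intent reports can be as simple as diffing their score maps. Here’s a hypothetical sketch, assuming each report is a dict like the `intent_scores` object in the JSON example above and treating a missing intent as a score of 0.

```python
def intent_changes(before: dict[str, int], after: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Return {intent: (old_score, new_score)} for every intent whose score moved."""
    intents = sorted(before.keys() | after.keys())
    return {
        intent: (before.get(intent, 0), after.get(intent, 0))
        for intent in intents
        if before.get(intent, 0) != after.get(intent, 0)
    }


last_quarter = {"Research": 3, "Transactional": 1}
this_week = {"Research": 1, "Transactional": 3, "Video": 0}
print(intent_changes(last_quarter, this_week))
```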

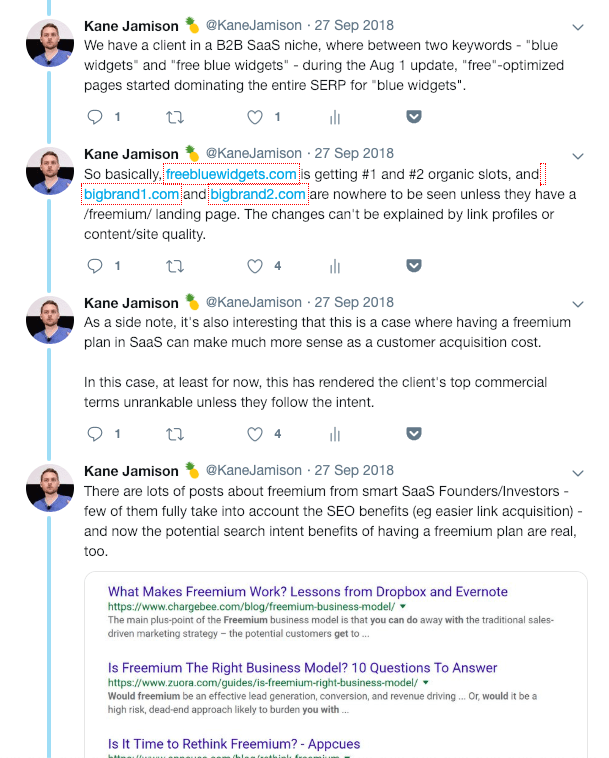

We have an interesting example of how the August 1, 2018 algorithm update affected a B2B SaaS client we used to work with, which I tweeted about in a thread at the time.

How We’re Determining Split Intent

If a SERP scores 2 or 3 in a number of areas, that would be an appropriate time to report “Split Intent” to the researcher.

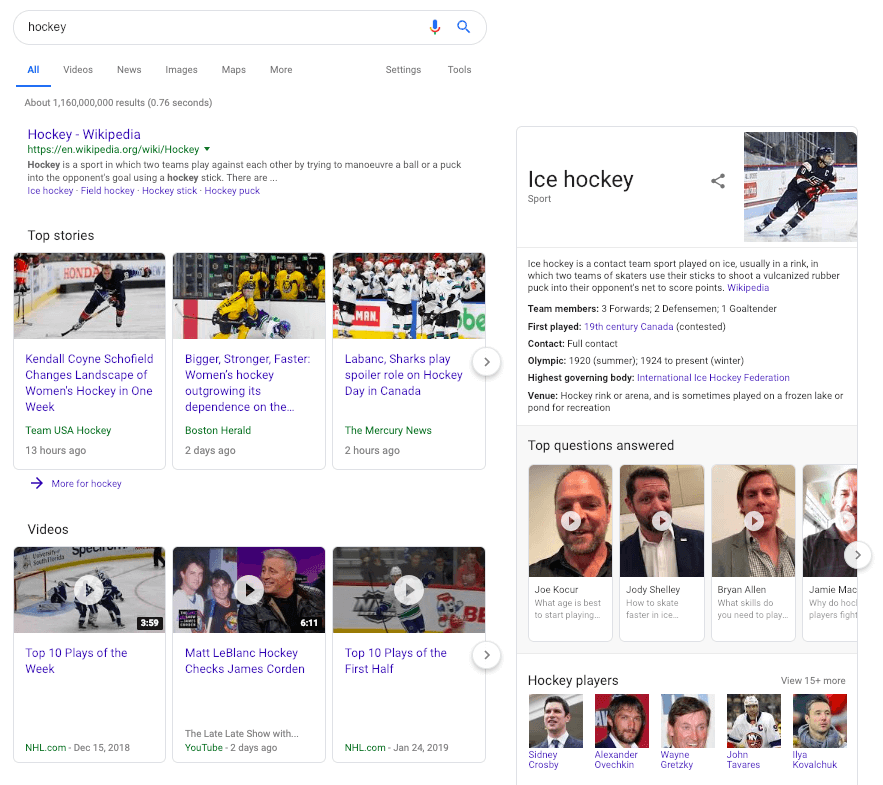

For example, on the [hockey] SERP shown above, we see multiple results indicating intents that would score 3 on our scale:

- Fresh Intent and news results

- Multiple Research Intent signs from Wikipedia, knowledge graph boxes, and PAA results.

- Multiple Visual Intent signs from video packs and from Image and Video intent suggestions in the navigation.

- Multiple Local Intent signs from a local pack in the top 20 results, and localized organic results such as SeattleThunderbirds.com in my area.

In this case it would be misleading to an analyst to call this a Fresh Intent query or a Research Intent query. I would report this as a Split Intent keyword, and perhaps tell the user a ‘secondary intent’ of Fresh Intent.
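The Split Intent rule can be sketched in a few lines. The cutoff of three competing intents is a hypothetical choice, since the rule above only says “a number of areas.” Ties keep their input order, so which competing intent is listed first is arbitrary in this sketch.

```python
def classify(scores: dict[str, int], min_competing: int = 3) -> tuple[str, list[str]]:
    """Return (primary_intent, competing_intents).

    Intents scoring 2 or 3 count as competing; if at least `min_competing`
    of them show up (a hypothetical cutoff), report "Split Intent".
    """
    if not scores:
        raise ValueError("no intent scores to classify")
    ranked = sorted(scores, key=lambda intent: scores[intent], reverse=True)
    competing = [intent for intent in ranked if scores[intent] >= 2]
    if len(competing) >= min_competing:
        return "Split Intent", competing
    return ranked[0], competing


hockey = {"Fresh / News": 3, "Research": 3, "Visual": 3, "Local": 3, "Transactional": 0}
print(classify(hockey))
```

The second element of the result could back the “secondary intent” shown to the user.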

How We’re Handling Unknown Intent

There will always be “10 blue link” search results where the intent just isn’t clear to us. We’re currently deciding between returning a primary intent of “None” or “General” in these cases, and we’d be open to feedback.

I feel that “General” indicates the intent is unclear, which is different from a nil result of some type where data was missing.
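Here’s a sketch of how the two fallbacks could be kept distinct, assuming a hypothetical cutoff: `None` means no score data came back at all, while “General” means data came back but no intent scored 2 or higher.

```python
from typing import Optional


def report_primary(scores: Optional[dict[str, int]], min_primary: int = 2) -> Optional[str]:
    """Pick a primary intent, separating "no data" from "no clear intent".

    None    -> scores missing entirely (e.g. the SERP couldn't be collected).
    General -> data was collected, but nothing scored at least `min_primary`
               (a hypothetical cutoff), as on a plain "10 blue links" SERP.
    """
    if not scores:
        return None
    best = max(scores, key=lambda intent: scores[intent])
    return best if scores[best] >= min_primary else "General"


print(report_primary({"Research": 1, "Local": 0}))
```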

Want To Take It For A Spin?

We now offer full access to standalone search intent reports inside of Content Harmony's software.

Bulk data pricing and service options will be released during 2021.

Schedule a demo to learn more or see if we can solve your use case.

Want to see how Content Harmony helps you build content that outranks the competition?

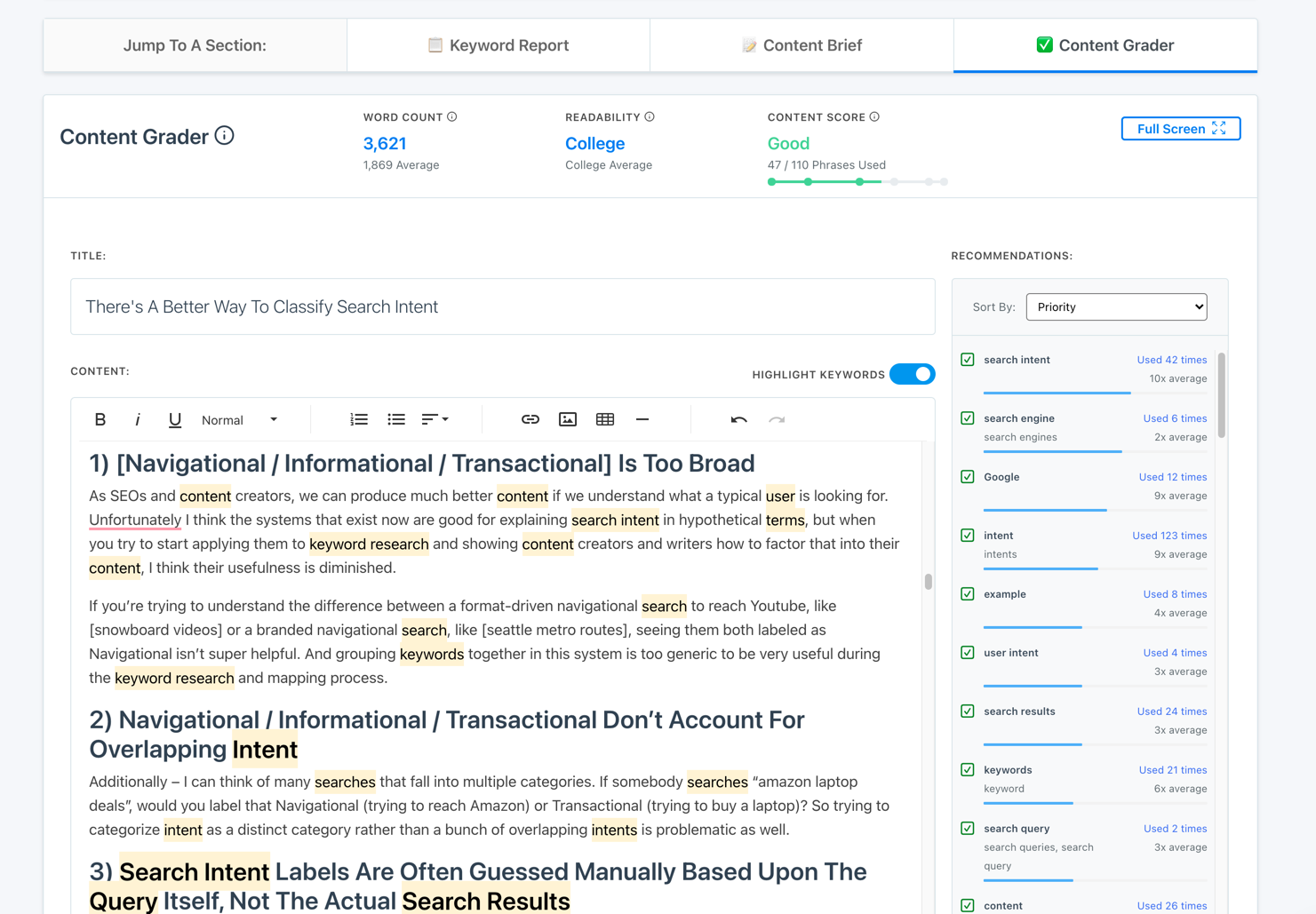

The blog post you just read scores Good in our Content Grader for the topic "how to classify search intent".

Grade your content against an AI-driven topic model using Content Harmony - get your first 10 credits for free when you schedule a demo, or sign up here to take it for a spin on your own.
